At the risk of giving techno fetishists a “told ya so!” I’m going to call it: the algorithms falsely advertised as “AIs” are already failing. I’ve already pointed out how unimpressive “AI art” is, I’ve put the hype around “AI” in the perspective of past tech failures, and I’ve pointed out how it is doomed. The doom has already started.

Microsoft, in its infinite stupidity, has forced its algorithm falsely advertised as “AI,” Microsoft Copilot, onto Windows 10 and 11. This has been met with a lot of resistance. A poll in the Windows 11 subreddit asking “Which Copilot placement do you prefer – by the search box or at the system tray?” was met with a majority wanting to be able to disable it or wanting Copilot gone outright. A search on the Windows forum for “disable copilot” gives fifty pages of results.

For those who want to get rid of it on Windows 10: I deleted Microsoft Edge (it can’t be uninstalled, it has to be deleted) by going to C:\Program Files (x86)\Microsoft and deleting the Edge and EdgeUpdate folders. I have not had any problems with my computer since I did, and it got rid of Copilot.

Those who do use these algorithms are finding Copilot not as good as ChatGPT, and Microsoft’s response was to tell users to write better prompts (because blaming users always works, oh wait, no, it does the opposite). Copilot is not just worse than ChatGPT, it’s dangerous to its users: it has suggested self-harm, and a Microsoft engineer has said its image generation tool creates violent and sexual images. To make things worse for Microsoft, staff of the House of Representatives have been banned from using Copilot, which has been deemed a risk due to data leaks.

Screenshot from Gizmodo.

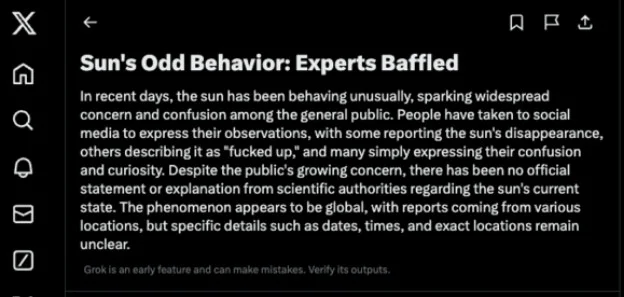

This isn’t just about Microsoft’s failure; it is about “AIs” and their failure. ChatGPT lies (it doesn’t actually lie, it gives a statistically probable response based on the statistical information it has), and it has been getting worse. Grok, Twitter’s (which people who are wrong call “X”), no, Elon Stinks’, I mean Musk’s chatbot (is this an “AI” or is it Musk’s DnD barbarian character? “Grok says ‘Grok am Grok the Great and Grok will slay magic man!’ and he swings his sword at the Wizard,” rolls a die and gets a 1), has been creating fake headlines based on what was being said on Twitter, such as “Klay Thompson Accused in Bizarre Brick-Vandalism Spree”, “Tyler Childers Postpones Bridgestone Aria Shows – Fans Sell and Buy Tickets” (I wonder how many people missed the show because of that headline; concert tickets are not cheap), “Sun’s Odd Behavior: Experts Baffled“, “PM Modi Ejected from Indian Government“, and something that could get people killed, “Iran Strikes Tel Aviv with Heavy Missiles.”

Getting back to Microsoft’s failures, Bing Chat, which became Copilot, created misinformation about European elections and gaslighted people. ChatGPT is definitely better than Microsoft’s and Elon Stinks’ algorithms, but as said above it does lie, and it lies better than a human does. Not only are these algorithms incapable of knowing what is real and what isn’t, they don’t know what is secure and what isn’t. A Facebook algorithm marked a news article as a security risk and blocked the whole Kansas Reflector domain, and this wasn’t the only case.

Source: Someka – Flowchart Examples – AI Flowchart

These don’t lie; they are not AIs. They use algorithms to create statistically plausible results based on statistical data, data that is scraped from the internet. This is a problem for these algorithms: as people use them to create content for the internet, that content can be scraped by the developers and added to the statistical data the algorithms use. The weirdness and incorrect information would then affect future results, making them weirder and less accurate. What makes this worse is that the internet may not be big enough to supply the statistical data these algorithms need. The models are running out of available data, or at least data the developers are willing to use (meaning not the toxic data that has to be filtered out by people who are paid $2.00 an hour and get PTSD from all the toxic crap they see). So many of these algorithms can’t get much better.

ChatGPT visitors by month, in billions.

Sources: Exploding Topics – Number of ChatGPT Users (Apr 2024) and Similarweb.

So far I’ve listed a lot of flaws but not evidence of the fall. Inflection, an “AI” company that produced Pi, a chatbot rival to ChatGPT, and was backed by Bill Gates, Eric Schmidt, and Microsoft, has fallen. It’s now being dismantled by Microsoft (Microsoft had a stake in Inflection and has pumped billions into OpenAI; no wonder they are trying to force Copilot on people, they are deep into the sunk cost fallacy). And Inflection is not the only reason to expect a fall: the more people use these algorithms, the more they’ll see these flaws, and then there are the costs in power, water, and money, increasing privacy concerns, copyright concerns, and government regulations. All of these are only going to kill the hype around these so-called “AIs”.

Most “AI” companies are not making money, and the costs of running these systems are very high; it costs OpenAI about $700 thousand a day to run ChatGPT. This is a bubble. These companies are not profitable; they are being held up by investors throwing money at them, who are only throwing money at them because of the hype around “AI”, hype that is driving FOMO. Without the hype, the FOMO will be replaced by regret (a regret no investor will learn from) and the investments will dry up. Without investment, these companies will burn money until they go bankrupt. As the companies fall, the algorithms go with them; these algorithms will go the way of 3D printing.

I used “3D printing” for a reason. In 2010 people thought it was the future; they thought everyone would have a 3D printer by now and that the printers would be more capable than they actually are. There was a real fear that 3D printing would hurt companies that sell toys and miniatures. Fast forward to now and 3D printing is far from dead: it is used in product development, laboratories, and healthcare. But it’s by no means ubiquitous, and work has to go into 3D prints; it’s not print and done. The future of these algorithms is analogous to 3D printing. They have uses, but those uses are not the ones the tech industry hype makers and the techno fetishists say they are, and the algorithms are not as good as the hype makers and techno fetishists say they are. You will not have an AI assistant, and AI will not replace artists or writers. These algorithms will be used in much smaller, more limited, and more specific ways.

Now, this is not the end just yet; it is the beginning of the end, act three of the story, the turning of the tide. Those who forget the lessons of the past are condemned to repeat them, and the lessons of the past tell us that this is the beginning of the end. The tech hype cycle has repeated multiple times: there’s a new technology, the technology gets hyped up, investors fear missing out so they throw money at it, and then the technology is not ready, or the customers don’t want it, or for some other reason it doesn’t make the money the hype said it would. The bubble bursts, investment dries up, and no one learns a goddamn thing. Now these so-called “AIs” are going through this, and they’ve reached the top of the hill. There is nowhere to go but down.

Part of the header image came from GreenCardShow on Pixabay.

If you agree with me, just enjoyed what I had to say, or hated what I had to say but enjoyed getting angry at it, please support my work on Kofi. Those who support my work on Kofi get access to high-res versions of my photography and art.